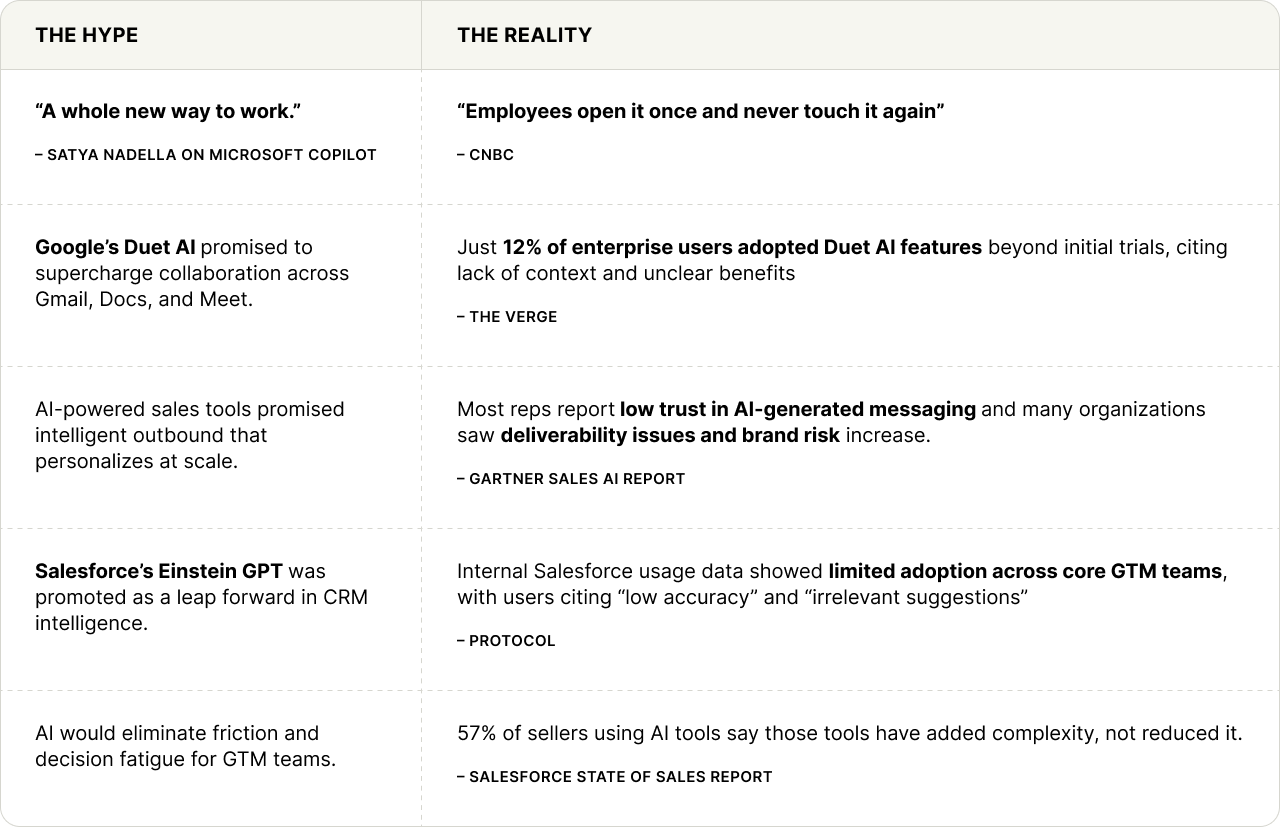

The Promise Was Big. The Reality? Not So Much.

Over the last 18 months, “AI” has dominated headlines and boardrooms, from Claude and ChatGPT to Cursor, vibe coding, and every new agent demo that promised to change how work gets done. It was supposed to unlock a new generation of sales productivity, personalized engagement, and go-to-market (GTM) efficiency.

Revenue leaders were promised that AI would change everything. Reps would spend less time searching for content. In the most extreme pitches, you would never need to hire another SDR, and you could even “replace” whole parts of the funnel with automation. Enablement would be handled automatically. Messaging would stay fresh and compliant. Customer Success would manage more accounts with more impact. The whole revenue engine would run faster, leaner, and smarter, almost as if it were all just code and you could type “grow 100% year over year” and watch it happen.

But fast forward to today, and a different picture is emerging.

Most teams have not seen the promised transformation. AI rollouts have underdelivered. Tools get added, but results stay flat, and in many orgs the cost to acquire new customers has held steady or increased. Adoption lags, usage drops off, and leadership is left wondering what all the hype was about.

You’ve been sold a lie about AI tools.

Not in bad faith, but because the market confused retrieval with reasoning, and demo-stage performance with real-world enablement. It turned out that slapping AI on top of your stack does not make the stack smarter, and in revenue work where timing, context, and accuracy matter, a wrong suggestion is worse than no suggestion at all.

One caveat up front: “AI” is not one thing. It is a catch-all label that includes copilots, chat interfaces, automation tools, outbound generators, call summarizers, CRM assistants, and knowledge search products, all with different strengths and failure modes. This post is about the gap between the promise of AI-driven efficiency for GTM teams and what most teams have actually experienced, and it zooms in on one core category where the gap is especially visible: AI that claims to make your internal knowledge usable in the moments that matter.

The AI Era Promised Efficiency. But Most Revenue Teams Got Noise

Despite rapid adoption, most AI tools are not making a measurable difference in productivity. According to a 2023 McKinsey survey, nearly 40% of companies had adopted some form of generative AI, yet only about 11% reported any material productivity gains from that adoption.

GitHub Copilot, one of the most visible AI launches, showed similar cracks. A 2023 report from Gartner found that fewer than 10% of developers use it daily, and most cited issues with context, trust, and quality of suggestions as barriers to regular use.

And in go-to-market teams, the problem is even more acute.

G2’s 2024 State of Software report revealed that AI adoption across sales and marketing stacks has spiked 77% year-over-year, but only 19% of teams say AI has significantly improved outcomes like conversion rates or win rates. Instead, most report marginal benefits, or none at all.

So what happened?

The tools got smarter on paper, but they were not built for how GTM teams actually work. They retrieved data, but not answers, tactics, or deal strategy. They pushed content, but they could not reason through when, why, or whether it was safe to use. And in many organizations, a meaningful percentage of users never learned to use these tools well enough to trust them in live customer moments.

In theory, AI was supposed to be your top seller’s brain at scale. In reality? It became just another tab your team ignores.

Hype vs. Reality. The Gap in Enterprise AI Adoption

GTM is Messy by Design. And AI Needs to Understand That.

A common narrative has taken hold in the AI tooling space—when these systems fall short of expectations, the blame is often placed squarely on your data. Vendors who once promised that AI would eliminate friction across the revenue engine now quietly suggest that it’s your knowledge base, your content hygiene, or your lack of tagging that’s holding things back. The story goes: the tool is smart, your inputs just aren’t good enough.

But this version of the story leaves out something essential. Your data is not broken. It is distributed, inconsistent, and constantly evolving, which is to say, it reflects the normal operating conditions of any modern go-to-market organization. Assets live across systems. Messaging changes faster than documentation can keep up. Quotes, slides, tickets, and updates exist in different formats and lifecycles. That isn’t failure; it’s a natural byproduct of scale, complexity, and speed. Any tool that treats that reality as a problem to be fixed is fundamentally misunderstanding the environment it’s meant to support.

The real issue is that most AI systems were never designed to engage with this type of information in a meaningful way. They can ingest “unstructured” content like docs and transcripts, but they cannot reliably infer truth, approval status, strategic intent, or situational context. They can appear to handle messiness by summarizing and rephrasing it, but that is not the same as understanding what it means, what changed, what is valid, and what is risky. Systems built on shallow search methods or unsupervised embeddings might return content that appears connected to a query, but they cannot determine whether that content is still current, whether it’s contextually appropriate, or whether it supports the strategic intent of the task at hand.

This is compounded by the fact that many of these tools operate in isolation, functioning more like disconnected utilities than integrated systems. They pull information without understanding how it fits into broader workflows. They provide results without helping the user decide which content to trust, which version to use, or how different pieces relate to each other. Instead of reducing decision-making overhead, they add to it, placing the burden of interpretation back on the human and offering little in return beyond faster access to the same fragmented assets.

AI should not be a system that demands perfect data. It should be a system that can interpret imperfect data in practical, useful ways. It should complement your existing workflows and systems, drawing on the materials you already have rather than asking you to clean, tag, and reformat everything for the tool to work. A truly effective AI system does not expect a sanitized environment; it recognizes that value exists in the chaos and builds its logic accordingly.

The role of AI in revenue organizations is not to eliminate messiness, but to make sense of it. Real enablement doesn’t come from enforcing structure at the expense of speed and agility—it comes from building intelligence that can navigate ambiguity, resolve contradictions, and prioritize what matters most in the moment of action. That level of intelligence is only possible when the system is built on a foundation that reflects how your business actually works, not how a model would prefer it to.

When AI understands not just the content in front of it, but the context around it, what the asset was created for, who it was meant to serve, when it was last updated, and how it relates to the rest of your GTM motion, it becomes something fundamentally more valuable. It becomes an extension of your team’s thinking, not just another system they have to manage.

Why Revenue Leaders Are Stuck in the Gap Between Hype and Results

CROs and revenue leaders today are navigating an unusual kind of pressure. There’s a growing expectation—from boards, from investors, and from internal stakeholders—that AI will be the lever that unlocks greater efficiency, better performance, and leaner operations. The narrative is compelling, and the urgency is real. Every vendor pitch reinforces it, promising that AI will transform prospecting, compress sales cycles, and remove friction from the entire revenue engine.

As a result, AI tools are being deployed across nearly every part of the GTM stack, from outbound automation and sales enablement to customer success and onboarding. But while the promise sounds strategic, the tools themselves have remained largely tactical. They generate copy, retrieve assets, summarize notes, and draft emails, but they do not make decisions, apply context, or understand tradeoffs, which are the abilities that matter when a real deal is on the line.

Instead of creating leverage, these tools often introduce more overhead. Reps are asked to interpret AI-generated outputs, cross-check suggestions against internal guidance, and confirm whether content is current or even usable. In many cases, the task of reviewing the AI’s work becomes just another step in an already overloaded workflow. According to Salesforce’s 2023 State of Sales report, a majority of sellers using AI tools say the experience has added complexity, not reduced it.

The result is not transformation. It’s fatigue. AI was sold as the solution to GTM chaos, but most systems simply reflect it, without offering a better way through.

Real AI Value Comes From Understanding, Not Just Access

If an AI system cannot model how your business actually operates, including how products evolve, how customers and segments differ, how features roll out, and how compliance rules and messaging change over time, it cannot help teams make better decisions when it counts. It may be able to search across systems or surface information that looks relevant, but it lacks the ability to support real execution because it doesn’t understand context.

That’s where most “AI for GTM” tools fall short. They stop at access. They can suggest content, but they can’t prioritize what’s appropriate. They can automate retrieval, but they can’t protect teams from outdated, incorrect, or risky information. They can move fast, but they can’t reason about how different pieces of knowledge relate to one another.

What revenue teams actually need is an AI layer that understands nuance: that a quote from last year may no longer apply, that a new product release shifts positioning, or that certain proof points are only approved for specific verticals, regions, or customer types. Without this level of logic and representation, an AI system doesn’t become a reliable decision-making partner. It becomes an expensive search engine dressed up as intelligence.
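To make that kind of nuance concrete, here is a minimal Python sketch of a "safe to use" check. Everything in it is an illustrative assumption: the `ProofPoint` fields, the `is_safe_to_use` helper, the 365-day freshness window, and the rule that an empty approval set means "no restriction" are hypothetical, not a description of how any particular product works.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta


@dataclass
class ProofPoint:
    """Hypothetical record for a customer quote or stat used in selling."""
    text: str
    captured_on: date
    approved_verticals: set[str] = field(default_factory=set)
    approved_regions: set[str] = field(default_factory=set)


def is_safe_to_use(point, vertical, region, today, max_age_days=365):
    """Return (ok, reasons) for using this proof point in a given deal.

    Assumption: an empty approval set means the proof point is unrestricted.
    """
    reasons = []
    if today - point.captured_on > timedelta(days=max_age_days):
        reasons.append("stale")
    if point.approved_verticals and vertical not in point.approved_verticals:
        reasons.append("vertical not approved")
    if point.approved_regions and region not in point.approved_regions:
        reasons.append("region not approved")
    return (not reasons, reasons)
```

The point of returning reasons alongside the yes/no answer is that a rep mid-conversation needs to know why something is off-limits, not just that it is.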

How Mash Approaches AI Differently

At Mash, we believe real enablement starts with context, which is why we built our system not to simply index content, but to model knowledge in a way that reflects how revenue teams actually work. Decks, documents, calls, tickets, and product changelogs aren’t interchangeable blobs of text. They’re fundamentally different types of data with distinct structures, purposes, and lifespans, and treating them as such is critical to making them useful in real customer moments.

Instead of chunking information into generic pieces, Mash represents it. A customer quote isn’t just text; it’s tied to a specific product, feature, persona, timestamp, and permission level. A deck isn’t just a file; it’s part of an evolving narrative that changes as GTM messaging and positioning change. A product update doesn’t live in isolation; it’s connected to support tickets, customer outcomes, and downstream positioning shifts. Sales calls aren’t just transcripts; they’re parsed for signals that can reinforce or conflict with other insights across the system.
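As a rough illustration of the difference between chunking and representing, the Python sketch below models a quote and a product update as typed records and links them by product and feature. It is not Mash's actual data model: the class names, the fields beyond those listed above, the permission labels, and the `quotes_to_review` helper are all hypothetical.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class CustomerQuote:
    text: str
    product: str
    feature: str
    persona: str
    captured_on: date
    permission: str  # assumed labels, e.g. "public" or "internal-only"


@dataclass(frozen=True)
class ProductUpdate:
    product: str
    feature: str
    released_on: date
    summary: str


def quotes_to_review(quotes, updates):
    """Pair each quote with the first listed update that postdates it
    for the same product feature. Quotes with no such update are omitted."""
    flagged = []
    for q in quotes:
        for u in updates:
            same_feature = (q.product, q.feature) == (u.product, u.feature)
            if same_feature and u.released_on > q.captured_on:
                flagged.append((q, u))
                break  # one newer update is enough to flag the quote
    return flagged
```

Because each record carries its own product, feature, and date, a question like "which quotes predate the latest change to this feature?" becomes a simple comparison rather than a keyword search over undifferentiated text.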

The result isn’t AI that simply retrieves information faster, but AI that reasons more like your best rep, helping every team member make better decisions without hours of internal searching, Slack interruptions, or manual judgment calls. And because Mash is built to understand context rather than just surface content, adoption stays high, trust increases, and outcomes improve.

A Final Note to Revenue Leaders: It’s Time to Ask More From Your AI

You’ve likely been told that AI is the future of sales and marketing, and that’s true, but only when AI reflects the reality of how revenue teams actually operate. For it to be genuinely useful, it has to model complex workflows, reason across messy and distributed systems, and understand nuance: what’s safe to send, what’s most relevant in a given moment, and what information is actually likely to help move a deal forward (and not just what happens to match the right keywords).

When AI tools simply surface content faster while pushing the responsibility for judgment, validation, and prioritization back onto the team, they don’t deliver enablement. They deliver noise. The burden of deciding what’s correct, current, and appropriate still falls on the rep or SE, often in the middle of a live customer conversation.

At Mash, we’re building the system revenue teams thought they were getting the first time around. Not AI that just finds content, but AI that helps teams understand it, apply it, and win with it.